Data Collection and Sharing for Program Evaluation and Performance Measurement

Jurisdictions need reliable data to evaluate the effectiveness of programming and to implement evidence-based practice. Data collection systems must be accessible and must produce data for performance measurement so that jurisdictions can determine whether intended outcomes are being achieved. This in turn reinforces the use of evidence-based practices and builds additional accountability into service systems. To support this work, jurisdictions may develop various products, including program evaluation designs, data collection systems, performance measurement systems, data security procedures, and data sharing agreements.

Defining Program Evaluation Versus Performance Measurement

Program evaluation and performance measurement are two sides of the same coin, or two points on the same continuum.

Program evaluation is best understood as a focused assessment of the impact of a single intervention, or a cluster of interventions, designed to serve a specific group of young people. Program evaluation is partially informed by the results of performance measures (see below). It also uses qualitative methods and additional quantitative methods to answer two questions: why the program experiences certain outcomes, and what impact the program is having. For example, an agency using motivational interviewing may want to see whether its results match the effects reported in the literature or observed in other jurisdictions. It may also simply want to compare this intervention against its standard practices. Program managers may use feedback from evaluations to make critical changes that enhance the program's effectiveness.

Performance measurement is usually a broader effort. Whereas program evaluation is often limited to a single intervention or service, performance measurement assesses activities and outcomes at a range of levels. Performance measures help us:

- understand what work is being accomplished, so we know whether we’re meeting established goals or benchmarks;

- identify areas for improvement; and

- consider how to most efficiently allocate money and resources.

Using data for performance measurement can answer questions about the impact of individual workers, agencies, and organized systems of intervention at the local, regional (county, parish, judicial district), state and even national levels. When carefully implemented, performance measurement can help us compare the relative effectiveness of providers in achieving outcomes that make a difference for young people and their families.

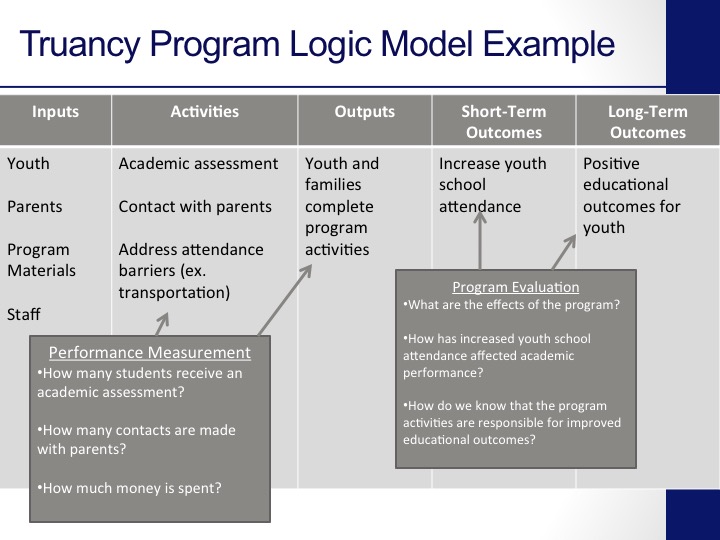

This logic model for a truancy prevention program includes both performance measurement and program evaluation questions:

Performance measurement questions focus on what is happening in the truancy program. They could include:

- How many students receive an academic assessment?

- How many contacts are made with parents?

- How much money is spent?

Program evaluation involves a more in-depth analysis of the truancy program. Such questions could include:

- What are the effects of the program?

- How has increased youth school attendance affected academic performance?

- How do we know that the program activities are responsible for improved educational outcomes?
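Most of the performance measurement questions above are simple counts and totals that can be computed directly from routine program records. The sketch below assumes a hypothetical per-student data extract. The `StudentRecord` fields and the `performance_measures` function are illustrative names and do not come from any particular case management system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StudentRecord:
    """One enrolled student in a hypothetical truancy program data extract."""
    student_id: str
    received_assessment: bool  # did the student receive an academic assessment?
    parent_contacts: int       # number of documented contacts with parents
    program_cost: float        # dollars spent on this student's services


def performance_measures(records: list[StudentRecord]) -> dict[str, float]:
    """Answer the 'what is happening?' questions with counts and totals."""
    assessed = sum(1 for r in records if r.received_assessment)
    contacts = sum(r.parent_contacts for r in records)
    spent = sum(r.program_cost for r in records)
    return {
        "students_enrolled": len(records),
        "students_assessed": assessed,
        "parent_contacts": contacts,
        "total_spent": spent,
        # Cost per enrolled student is one simple efficiency indicator.
        "cost_per_student": spent / len(records) if records else 0.0,
    }


if __name__ == "__main__":
    sample = [
        StudentRecord("S1", True, 3, 1200.0),
        StudentRecord("S2", False, 1, 800.0),
        StudentRecord("S3", True, 4, 1000.0),
    ]
    print(performance_measures(sample))
```

These counts describe what the program is doing. On their own, they cannot show that the program's activities caused better attendance or academic performance. That question belongs to program evaluation.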

In the remainder of this Tool Kit, we discuss asking the right questions and creating an appropriate infrastructure for data sharing to support both program evaluation and performance measurement. Where a distinction between the two is necessary, we will make that explicit; otherwise the core principles are the same for both types of analysis.

Accountability

In juvenile justice, as in all of human services, the demand for accountability has grown in recent decades. A few reasons for this trend stand out. First, there is a sense that a “business case” must be made for public services. It is no longer assumed that all programmatic expenditures are equally worthwhile, and there is a major push for demonstrable outcomes. Second, as we have learned that some interventions are more effective than others, there is a clear demand to use every scarce dollar wisely to yield maximum benefit.

Decades ago, private industry and the federal government began reorienting their processes toward performance management (most popularly referred to as “management by objectives”). Health care adopted these practices under what is now commonly referred to as “continuous quality improvement.” The basic premise is that any enterprise should monitor its efforts to ensure that it is as effective (i.e., achieves its intended goals) and as efficient (i.e., achieves those goals in a way that delivers the highest value for the lowest cost) as possible. These standards apply to any human services intervention.

The use of performance measurement and outcomes monitoring by child-serving agencies is rapidly increasing. Many states are using performance-based contracts to replace more traditional block grant awards to service providers. These contracts spell out benchmarks for success for a specified period, and the agency must meet those benchmarks to qualify for continued reimbursement. For example, a juvenile justice or child welfare agency may be asked to reduce out-of-home placements for young people, with reduction targets set from data on prevailing rates of out-of-home placement. Other common outcomes focus on reducing incarceration and improving school attendance and/or school performance.
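The arithmetic behind a benchmark like the out-of-home placement target above can be sketched in a few lines. This is a minimal illustration under assumed conventions. Expressing placements per 100 youth served, the 25% reduction, and all function names are hypothetical. Actual contracts define their own denominators, baseline periods, and targets.

```python
def placement_rate(placements: int, youth_served: int) -> float:
    """Out-of-home placements per 100 youth served (an assumed rate definition)."""
    if youth_served <= 0:
        raise ValueError("youth_served must be positive")
    return 100 * placements / youth_served


def reduction_target(baseline_rate: float, target_reduction_pct: float) -> float:
    """Rate the provider must reach, given a baseline rate and a contracted % reduction."""
    return baseline_rate * (1 - target_reduction_pct / 100)


def met_benchmark(placements: int, youth_served: int,
                  baseline_rate: float, target_reduction_pct: float) -> bool:
    """True if the provider's observed rate is at or below the contracted target."""
    observed = placement_rate(placements, youth_served)
    return observed <= reduction_target(baseline_rate, target_reduction_pct)


if __name__ == "__main__":
    # Hypothetical: baseline of 20 placements per 100 youth, contract asks for a 25% cut.
    print(reduction_target(20.0, 25.0))       # target rate of 15 per 100
    print(met_benchmark(28, 200, 20.0, 25.0)) # 28 of 200 youth placed, rate of 14 per 100
```

Tying the target to a baseline rate, rather than to a raw count, keeps the benchmark fair when the number of youth served changes from one contract period to the next.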

Evidence-Based Practice

An additional driver of the movement for outcomes measurement has been the movement toward the use of scientifically validated methods to achieve desired goals. This is generally referred to as the “Evidence-Based Practices” movement.

Few terms are used more widely, and yet more imprecisely, than “evidence-based practices,” hereafter referred to as “EBPs.” Because EBPs are connected to program evaluation, performance measurement, and outcomes, it is worth clarifying where these concepts overlap and where they are distinct.

The basic principle driving EBPs is that rigorous scientific work can identify specific ways of working with people that have a high likelihood of success. Those people may be juveniles, persons with psychiatric or substance use conditions, individuals with diabetes, and so on. If we know something works better than something else, why not use it?

The Institute of Medicine, part of the National Academies, defines an evidence-based practice as “the integration of best research evidence with clinical expertise and patient values.”[1] In other words, it blends scientific understanding, the skills of the practitioner, and the culture and belief systems of the individuals being served. This definition has been adapted to a broad range of fields. In the behavioral health field, Drake and his colleagues have described evidence-based services as “clinical or administrative interventions or practices for which there is consistent scientific evidence showing that they improve client outcomes.”[2]

In determining whether an intervention is evidence based, evaluators attempt to determine the:

- degree to which the program is based on a well-defined theory or model;

- degree to which the target population received sufficient intervention (the “dosage”);

- quality of the data collection and analysis procedures; and

- degree to which there is strong evidence of a cause-and-effect relationship between the program and the outcomes of the youth who participate in it.
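The “dosage” criterion can be made concrete with a simple summary of how many participants actually received the intended amount of the intervention. The sketch below is illustrative only. It assumes attendance is recorded as a count of sessions per participant, and the minimum dose of 8 sessions is a hypothetical threshold. A real program model would specify its own.

```python
def dosage_summary(sessions_attended: list[int], minimum_dose: int) -> dict:
    """Summarize how many participants received at least `minimum_dose` sessions."""
    if minimum_dose < 1:
        raise ValueError("minimum_dose must be at least 1")
    participants = len(sessions_attended)
    sufficient = sum(1 for s in sessions_attended if s >= minimum_dose)
    return {
        "participants": participants,
        "received_sufficient_dose": sufficient,
        # Share of participants who met the threshold; 0.0 if nobody enrolled.
        "share_sufficient": sufficient / participants if participants else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical session counts for five youth; assumed minimum dose of 8 sessions.
    print(dosage_summary([12, 4, 10, 8, 12], minimum_dose=8))
```

If only a small share of participants received a sufficient dose, weak outcomes may reflect incomplete delivery of the intervention rather than a flaw in the model itself. That distinction matters when judging whether a practice is evidence based.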

Is an “evidence-based practice” the same as a “promising practice”? The answer is “No,” although the terms are often used interchangeably. The Washington State Institute for Public Policy (WSIPP), in its Updated Inventory of Evidence-based, Research-based, and Promising Practices, provides the following definitions.

Evidence-based: A program or practice that has been tested in heterogeneous or intended populations with multiple randomized and/or statistically-controlled evaluations, or one large multiple-site randomized and/or statistically-controlled evaluation, where the weight of the evidence from a systematic review demonstrates sustained improvements in at least one of the following outcomes: child abuse, neglect, or the need for out of home placement; crime; children’s mental health; education; or employment. Further, “evidence-based” means a program or practice that can be implemented with a set of procedures to allow successful replication and, when possible, has been determined to be cost-beneficial.

Research-based: A program or practice that has been tested with a single randomized and/or statistically-controlled evaluation demonstrating sustained desirable outcomes; or where the weight of the evidence from a systematic review supports sustained outcomes as identified in the term “evidence-based” (the above definition) but does not meet the full criteria for “evidence-based.” Further, “research-based” means a program or practice that can be implemented with a set of procedures to allow successful replication.

Promising practice: A program or practice that, based on statistical analyses or a well-established theory of change, shows potential for meeting the “evidence-based” or “research-based” criteria, which could include the use of a program that is evidence-based for outcomes other than the alternative use.
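The three WSIPP tiers nest inside one another, and that nesting is easier to see when the definitions are written as a decision procedure. The sketch below is a deliberately simplified reading of the definitions above, not WSIPP's actual review process. It ignores the required outcome domains and the “when possible, cost-beneficial” clause, and it reduces judgments such as “weight of the evidence” to hypothetical yes/no fields.

```python
from dataclasses import dataclass


@dataclass
class EvidenceSummary:
    """Hypothetical yes/no summary of a program's research base (illustrative fields)."""
    controlled_evaluations: int                # randomized and/or statistically-controlled evaluations
    large_multisite_evaluation: bool           # one large multiple-site controlled evaluation exists
    evaluation_shows_sustained_outcomes: bool  # at least one evaluation shows sustained desirable outcomes
    review_supports_sustained_outcomes: bool   # weight of evidence in a systematic review
    replicable_procedures: bool                # procedures exist that allow successful replication
    shows_potential: bool                      # statistical analyses or a well-established theory of change


def classify(e: EvidenceSummary) -> str:
    """Simplified reading of the WSIPP tiers, checked from strictest to loosest."""
    enough_testing = e.controlled_evaluations >= 2 or e.large_multisite_evaluation
    if enough_testing and e.review_supports_sustained_outcomes and e.replicable_procedures:
        return "evidence-based"
    single_study_support = (e.controlled_evaluations >= 1
                            and e.evaluation_shows_sustained_outcomes)
    if e.replicable_procedures and (single_study_support or e.review_supports_sustained_outcomes):
        return "research-based"
    if e.shows_potential:
        return "promising"
    return "not yet classifiable"


if __name__ == "__main__":
    # Hypothetical program: one controlled study with sustained outcomes, replicable manual.
    print(classify(EvidenceSummary(1, False, True, False, True, True)))
```

Checking the strictest tier first means a program is always assigned the highest tier it qualifies for. This mirrors how each WSIPP definition builds on the one above it.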

An excellent resource for evidence-based practice with the juvenile justice population is the Juvenile Justice Information Exchange.

Three Basic Tenets: Simplicity, Utility and Relevance

There are three tenets to keep in mind as one builds any program evaluation and/or performance measurement system(s):

Simplicity. The more complicated the evaluation design, the more time and effort it will take to implement. Some very concrete implications flow from this tenet. When possible, use data that is already collected and reported. If one must collect new information, select data elements that answer more than one question or serve more than one purpose, and integrate the data collection into routine practice. Conducting surveys and completing special questionnaires consumes time and energy for youth and their families as well as for staff. This point also bears on relevance and utility, but it is worth noting that the more complicated and numerous the data elements are, the higher the likelihood of errors. For a program evaluation or performance measurement system to be truly useful, it has to be credible, which means the data must be accurate.

Relevance. The most elegant evaluation design or performance measurement system is of no value if the data it yields flunks the “So what?” test. Seek only data that has genuine relevance for improving services to young people and the community. Be clear about the purpose of an evaluation or performance measure: Is the focus on improving the lives of clients? Increasing community safety? Reducing costs? It is important to engage key stakeholders in developing your evaluation design or performance measurement system; if they aren’t committed to finding the answers to the questions, it becomes an empty exercise.

Utility. The real purpose of performance measurement and program evaluation is to continuously improve services. If there is no mechanism for providing data back to workers, administrators, or other key stakeholders, then there is no point in starting. The data also has to be communicated in a timely way; if it takes a year to collect, analyze, and report data, it is essentially useless. This requires one to be extremely precise in deciding what to evaluate or measure: A single EBP? Overall agency performance? Worker effectiveness? Each would present different challenges and probably require different approaches.

The Centers for Disease Control and Prevention[3] has developed a simple graphic that conveys the same messages.

[1] Institute of Medicine. (2001). Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press.

[2] Drake, R.E., Goldman, H.H., Leff, H.S., Lehman, A.F., Dixon, L., Mueser, K.T., & Torrey, W.C. (2001). Implementing evidence-based practices in routine mental health service settings. Psychiatric Services, 52(2), 179-182.

[3] Available at http://www.cdc.gov/eval/framework/index.htm